FPGA System for ML-Optimized Image Processing in Irrigation Analysis

Understanding the Need for Smarter Irrigation

Water is one of the most valuable resources in agriculture. The balance between overwatering and under-watering directly affects crop yield, soil health, and long-term sustainability. Traditional irrigation methods rely on broad indicators such as weather conditions and soil moisture readings, but they often fail to capture real-time stress in individual plants.

One of the most precise ways to measure a plant’s water stress is through plant water potential, a measure of how tightly the plant holds water in its xylem: the more negative the value, the greater the water stress. The Scholander pressure chamber is a well-known method for determining this value. A leaf is cut and sealed inside the chamber with the cut end of the petiole protruding, and the pressure is increased gradually until water starts to exude from the cut surface. The pressure at that point, called the balance pressure, gives the leaf’s water potential.
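In equation form, the chamber reading is interpreted as follows. Here $P_b$ is the balance pressure, meaning the chamber pressure at which sap first appears at the cut surface. The 1.2 MPa value is purely illustrative, not a measurement from this project. The approximation also neglects the osmotic potential of the xylem sap, which is usually small.

```latex
\begin{aligned}
\Psi_{\mathrm{leaf}} &\approx -P_b \\
P_b = 1.2\,\mathrm{MPa}\ (= 12\,\mathrm{bar} \approx 174\,\mathrm{psi})
  \;&\Rightarrow\; \Psi_{\mathrm{leaf}} \approx -1.2\,\mathrm{MPa}
\end{aligned}
```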

The challenge, however, is that the amount of water released at the endpoint is minuscule, so detecting it reliably requires highly sensitive instruments. Many existing commercial solutions are expensive and still depend on manual observation, which puts them out of reach for small-scale farmers. My project aimed to develop an automated, cost-effective system that integrates FPGA-based image processing and machine learning to improve measurement precision, reduce manual intervention, and enable better irrigation planning.

Designing an Automated System for Plant Water Potential Measurement

The initial design aimed to capture droplet formation at the petiole in the Scholander pressure chamber and process it in real time on an FPGA. The hardware setup included:

- A Scholander pressure chamber to create a controlled environment for water potential measurement.

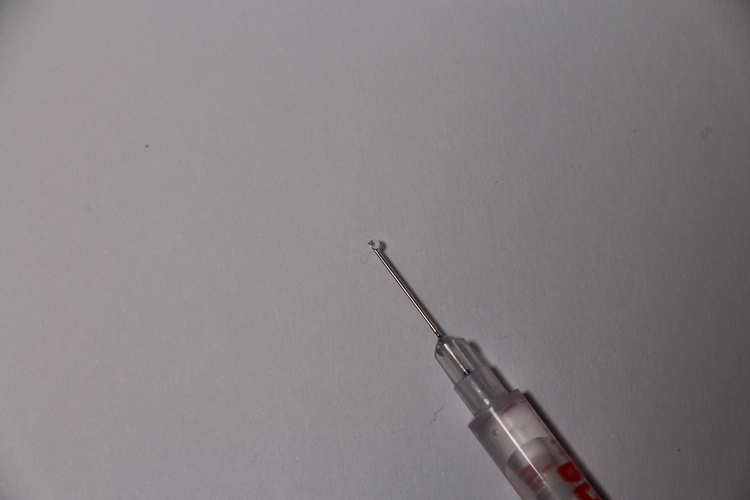

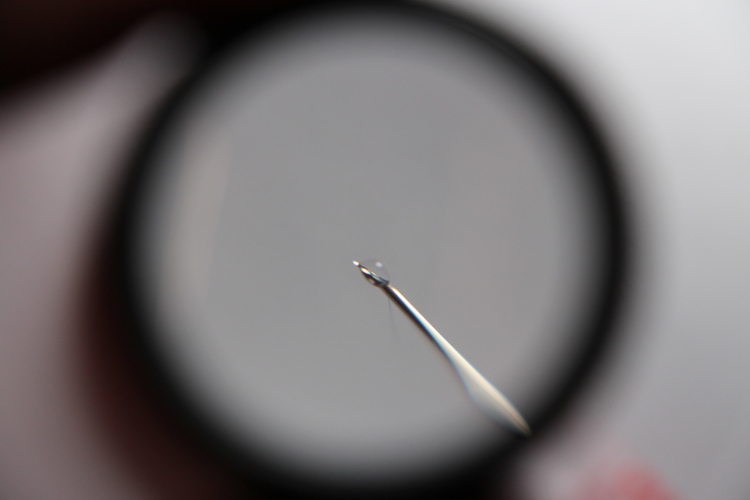

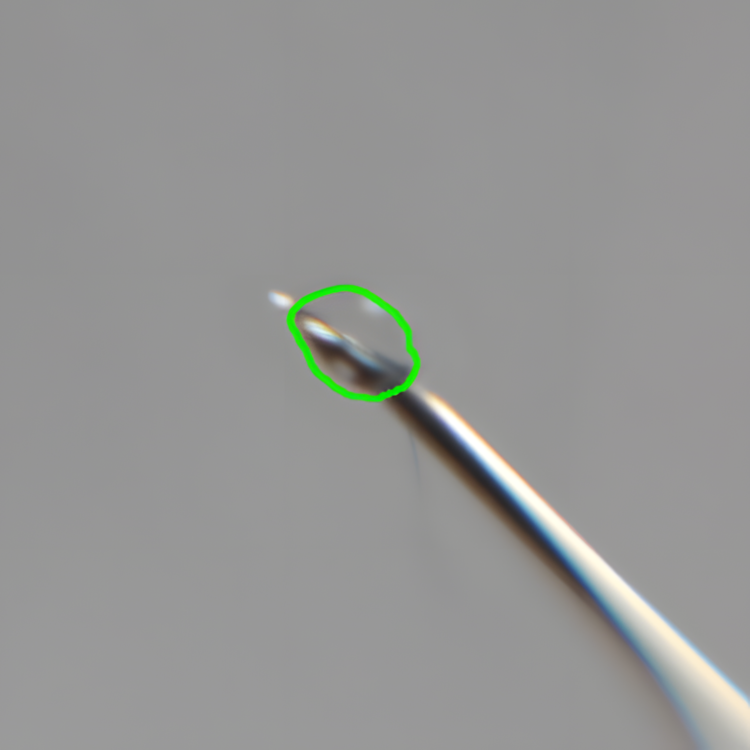

- A Raspberry Pi Camera Module with an RMS microscope lens to capture high-resolution images of the droplet.

- An FPGA board (Gowin Tang Nano) for real-time image processing and edge detection.

- A Raspberry Pi for dashboard visualization, cloud connectivity, and remote monitoring.

- A cloud-based data logging system for long-term trend analysis.

The system was designed to detect when the droplet formed, calculate its volume, and correlate it with the applied pressure to determine plant water potential. The initial assumption was that classical edge detection techniques would be sufficient for this task.
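The following is a minimal Python sketch of the volume and pressure-correlation steps. It assumes a segmentation stage already reports how many pixels in each frame belong to the droplet. Several choices here are hypothetical and serve only to illustrate the idea, because the post does not specify how volume was computed:

- the `Frame` record,
- the `min_area_px` detection threshold,
- the `mm_per_px` calibration factor,
- the spherical-droplet volume model.

The balance-point step uses the standard Scholander interpretation that water potential is approximately the negative of the chamber pressure.

```python
import math
from dataclasses import dataclass


@dataclass
class Frame:
    """One captured frame (hypothetical record format, for illustration)."""
    pressure_mpa: float   # chamber pressure when the frame was captured
    droplet_area_px: int  # pixels the detector labelled as droplet (0 = none)


def droplet_volume_mm3(area_px: int, mm_per_px: float) -> float:
    """Estimate droplet volume from its segmented area.

    Assumes the droplet is a sphere whose projected (circular) area equals
    the segmented area; mm_per_px would come from a calibration image.
    """
    area_mm2 = area_px * mm_per_px ** 2
    radius_mm = math.sqrt(area_mm2 / math.pi)
    return 4.0 / 3.0 * math.pi * radius_mm ** 3


def balance_point(frames, min_area_px=20):
    """Return (water_potential_mpa, frame_index) for the first frame whose
    droplet area reaches min_area_px, or None if no droplet is seen.

    Uses the Scholander relation: water potential ~ -balance pressure.
    """
    for i, frame in enumerate(frames):
        if frame.droplet_area_px >= min_area_px:
            return -frame.pressure_mpa, i
    return None


frames = [Frame(0.8, 0), Frame(0.9, 6), Frame(1.0, 45)]
print(balance_point(frames))  # (-1.0, 2)
print(droplet_volume_mm3(45, mm_per_px=0.01))
```

With noisy detections, a natural refinement would be to require the droplet to persist for several consecutive frames before accepting the endpoint.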

Challenges Encountered During Real-World Deployment

Once the system was deployed in real-world conditions, several unexpected challenges emerged:

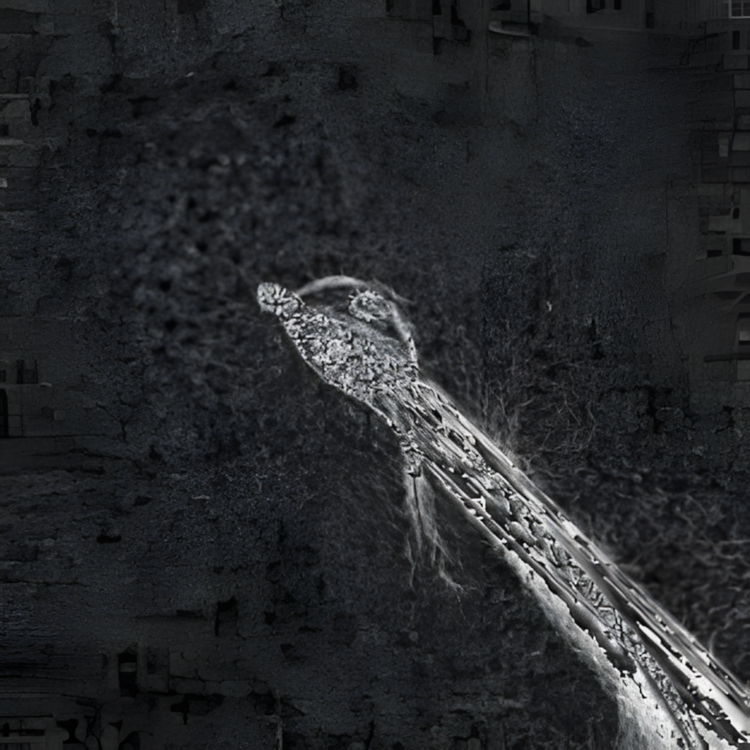

- Inconsistent Droplet Detection – The droplet formation process was difficult to capture accurately due to its small size and rapid evaporation.

- Lighting Variability – Natural lighting conditions caused reflections and inconsistent contrast in images, leading to unreliable edge detection.

- Noise from the Petiole Cut Surface – The freshly cut petiole often had microstructures that created false edges in traditional computer vision methods.

- Environmental Factors – Variations in temperature and humidity affected the formation and visibility of the droplet, complicating measurement.

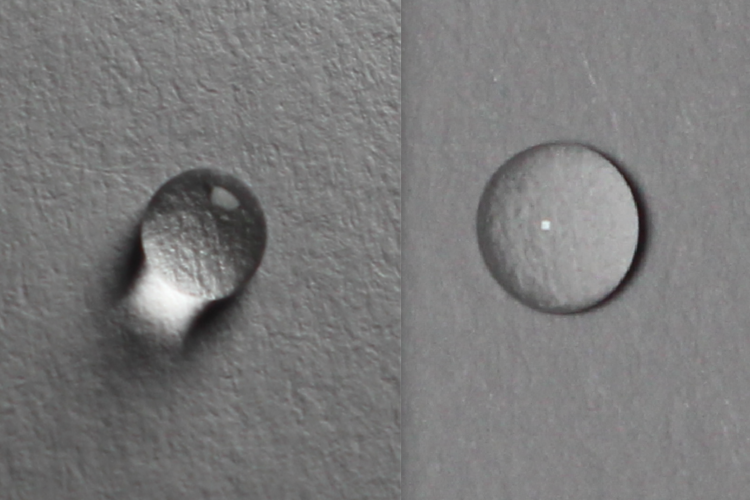

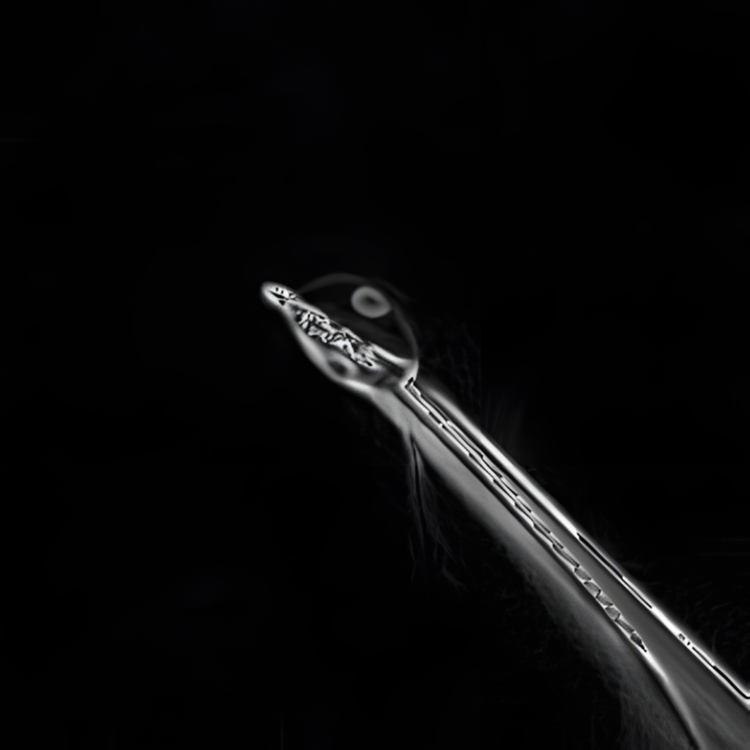

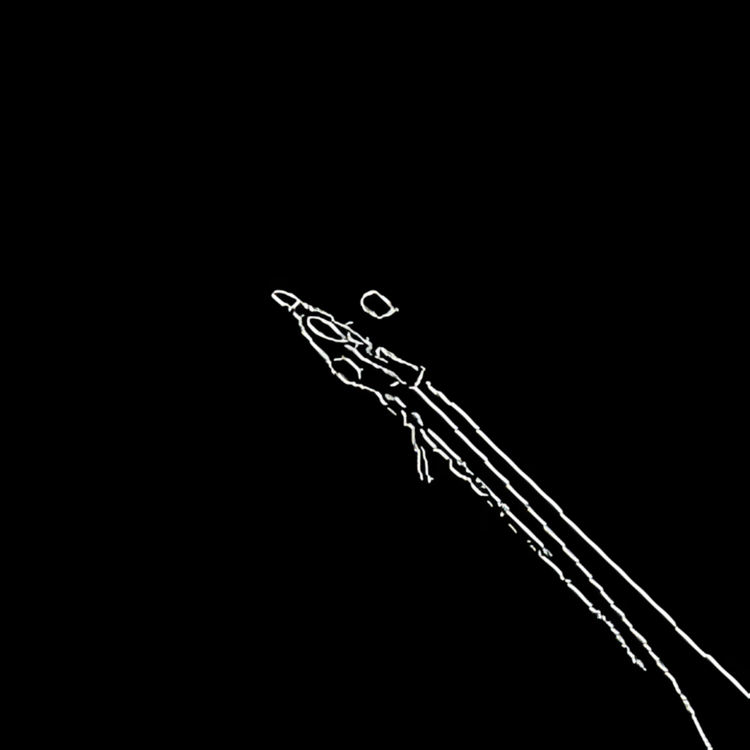

Initial tests showed that the Sobel and Canny edge detection algorithms were unreliable due to the above factors. The system needed a more robust approach to distinguish actual droplet formation from noise.
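For reference, the following minimal software model in Python shows what Sobel edge detection with a fixed threshold computes. It is not the project's FPGA design, which the post does not show. In hardware, the same 3x3 window is usually assembled from streaming line buffers, and the magnitude is often approximated as |Gx| + |Gy|.

The fixed `threshold`, an illustrative parameter, is what makes this approach fragile. A reflection or a ridge on the cut petiole can produce a gradient as strong as a droplet boundary. Meanwhile, a change in lighting scales every gradient up or down.

```python
import math

# 3x3 Sobel kernels for horizontal (GX) and vertical (GY) intensity changes.
GX = [[-1, 0, 1],
      [-2, 0, 2],
      [-1, 0, 1]]
GY = [[-1, -2, -1],
      [0, 0, 0],
      [1, 2, 1]]


def sobel_edges(img, threshold):
    """Mark pixels whose Sobel gradient magnitude reaches a fixed threshold.

    img is a list of rows of grayscale values; border pixels are left False.
    """
    h, w = len(img), len(img[0])
    edges = [[False] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = gy = 0
            for ky in range(3):
                for kx in range(3):
                    p = img[y + ky - 1][x + kx - 1]
                    gx += GX[ky][kx] * p
                    gy += GY[ky][kx] * p
            edges[y][x] = math.hypot(gx, gy) >= threshold
    return edges


# A vertical step from dark (0) to bright (100) between columns 1 and 2.
step = [[0, 0, 100, 100, 100] for _ in range(5)]
for row in sobel_edges(step, threshold=200):
    print("".join("#" if e else "." for e in row))
```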

Enhancing Detection Accuracy with Machine Learning

To address these challenges, I introduced a machine-learning-based segmentation approach to improve droplet volume detection, together with supporting changes to image preprocessing and irrigation planning:

1. Contrast Enhancement and Adaptive Thresholding

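Instead of relying on a fixed global threshold, Contrast Limited Adaptive Histogram Equalization (CLAHE) was applied to enhance contrast locally. CLAHE equalizes the histogram within small tiles of the image and clips each tile's histogram at a set limit, so that noise in flat regions is not over-amplified. This significantly improved edge clarity across different lighting conditions.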
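To make the clipping idea concrete, the following Python sketch performs tile-wise contrast-limited histogram equalization on an 8-bit grayscale image. It is a simplified form of CLAHE: each tile gets its own lookup table, with none of the bilinear interpolation between neighbouring tiles that full CLAHE uses. The parameters `tiles_y`, `tiles_x`, and `clip_factor` are hypothetical, because the post does not give the values used.

```python
def clipped_equalization_lut(pixels, clip_factor, levels=256):
    """Build a contrast-limited equalization lookup table for one tile.

    pixels are integer grey levels in range(levels).
    """
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1
    # Clip limit scales with tile size (roughly: clip_factor=1 caps each bin
    # at the count a perfectly flat histogram would have).
    clip = max(1, int(clip_factor * len(pixels) / levels))
    excess = 0
    for i in range(levels):
        if hist[i] > clip:
            excess += hist[i] - clip
            hist[i] = clip
    # Redistribute the clipped counts over all bins so the total is unchanged.
    bonus, remainder = divmod(excess, levels)
    for i in range(levels):
        hist[i] += bonus
    if remainder:
        step = max(levels // remainder, 1)
        for i in range(0, levels, step):
            if remainder == 0:
                break
            hist[i] += 1
            remainder -= 1
    # Map each grey level through the normalised cumulative histogram.
    lut, cumulative = [], 0
    for count in hist:
        cumulative += count
        lut.append(round((levels - 1) * cumulative / len(pixels)))
    return lut


def simple_clahe(img, tiles_y=2, tiles_x=2, clip_factor=2.0):
    """Tile-wise contrast-limited equalization (no inter-tile blending,
    so tile seams can be visible in the output)."""
    h, w = len(img), len(img[0])
    out = [row[:] for row in img]
    for ty in range(tiles_y):
        y0, y1 = ty * h // tiles_y, (ty + 1) * h // tiles_y
        for tx in range(tiles_x):
            x0, x1 = tx * w // tiles_x, (tx + 1) * w // tiles_x
            pixels = [img[y][x] for y in range(y0, y1) for x in range(x0, x1)]
            if not pixels:
                continue
            lut = clipped_equalization_lut(pixels, clip_factor)
            for y in range(y0, y1):
                for x in range(x0, x1):
                    out[y][x] = lut[img[y][x]]
    return out


# Low-contrast 4x4 image containing only grey levels 100 and 110.
img = [[100, 100, 110, 110] for _ in range(4)]
print(simple_clahe(img, tiles_y=1, tiles_x=1, clip_factor=40.0)[0])
print(simple_clahe(img, tiles_y=1, tiles_x=1, clip_factor=1000.0)[0])
```

The clip limit controls how far contrast can be stretched. In this example, levels 100 and 110 map to 112 and 159 with `clip_factor=40`, but to 128 and 255 when the limit is effectively off. In practice, OpenCV's `cv2.createCLAHE(clipLimit=..., tileGridSize=...)` followed by `.apply(gray)` provides full CLAHE, including the interpolation between tiles.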

2. Machine Learning for Droplet Segmentation

Traditional edge detection was insufficient, so I trained a machine learning model to segment droplets more accurately:

- Training Data: Manually labeled images of droplets vs. non-droplets were collected.

- Feature Extraction: The model learned to differentiate between real droplets and false positives from petiole markings.

- Geometric Constraints: To improve accuracy, additional heuristics were implemented:

  - The detected shape needed to be convex and nearly circular to be considered a droplet.

  - A center-line constraint required the detected edges to cross the middle of the image exactly twice, once on each side of the droplet, reinforcing the droplet’s expected geometry.
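The geometric checks above can be sketched in Python on a candidate contour (a polygon of `(x, y)` points) and a binary edge mask. Several details are assumptions rather than the project's actual parameters:

- Circularity is measured as 4πA/P², which equals 1.0 for a perfect circle.
- The minimum circularity of 0.8 is a hypothetical value.
- The "center line" is taken to be the horizontal middle row of the mask.

```python
import math


def area_and_perimeter(pts):
    """Shoelace area and perimeter of a closed polygon [(x, y), ...]."""
    twice_area, perimeter = 0.0, 0.0
    for (x1, y1), (x2, y2) in zip(pts, pts[1:] + pts[:1]):
        twice_area += x1 * y2 - x2 * y1
        perimeter += math.hypot(x2 - x1, y2 - y1)
    return abs(twice_area) / 2.0, perimeter


def is_convex(pts):
    """True if every turn along a simple (non-self-intersecting) polygon
    bends the same way."""
    sign = 0
    n = len(pts)
    for i in range(n):
        (x0, y0), (x1, y1), (x2, y2) = pts[i], pts[(i + 1) % n], pts[(i + 2) % n]
        cross = (x1 - x0) * (y2 - y1) - (y1 - y0) * (x2 - x1)
        if cross:
            turn = 1 if cross > 0 else -1
            if sign and turn != sign:
                return False
            sign = turn
    return True


def circularity(pts):
    """4*pi*A / P**2: 1.0 for a circle, pi/4 (about 0.785) for a square."""
    area, perimeter = area_and_perimeter(pts)
    return 4.0 * math.pi * area / perimeter ** 2 if perimeter else 0.0


def center_line_crossings(edge_mask):
    """Count separate runs of edge pixels along the middle row of a mask."""
    runs, inside = 0, False
    for px in edge_mask[len(edge_mask) // 2]:
        if px and not inside:
            runs += 1
        inside = bool(px)
    return runs


def looks_like_droplet(contour, edge_mask, min_circularity=0.8):
    """Apply all three heuristics: convex, nearly circular, two crossings."""
    return (is_convex(contour)
            and circularity(contour) >= min_circularity
            and center_line_crossings(edge_mask) == 2)


circle = [(10 * math.cos(2 * math.pi * k / 32), 10 * math.sin(2 * math.pi * k / 32))
          for k in range(32)]
mask = [[0] * 7, [0] * 7, [0, 1, 0, 0, 0, 1, 0], [0] * 7, [0] * 7]
print(looks_like_droplet(circle, mask))  # True
```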

3. Incorporating Weather and Soil Data for Long-Term Predictions

In addition to real-time analysis, the system was expanded to incorporate:

- Historical weather data for trend analysis.

- Real-time weather forecasts to predict upcoming irrigation needs.

- Soil analysis data to adjust irrigation recommendations based on moisture retention capacity.

- Time-series regression models to optimize scheduling based on climate trends.

These enhancements enabled the system to not only provide real-time measurements but also generate plant-type-specific irrigation recommendations tailored to environmental conditions.
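As a minimal illustration of trend-based scheduling, the following Python sketch fits an ordinary least-squares line to recent daily water-potential readings. It then projects how many days remain until the trend crosses an irrigation trigger level. This is the simplest possible time-series regression, not the project's actual model. The readings and the −1.2 MPa trigger are hypothetical, since real trigger levels are crop-specific. Weather forecasts or soil retention data could enter a fuller model as additional regressors.

```python
def fit_trend(days, psi_mpa):
    """Ordinary least-squares line: psi = slope * day + intercept."""
    n = len(days)
    mean_x = sum(days) / n
    mean_y = sum(psi_mpa) / n
    sxx = sum((x - mean_x) ** 2 for x in days)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(days, psi_mpa))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def days_until_irrigation(days, psi_mpa, threshold_mpa):
    """Days after the last reading until the fitted trend reaches
    threshold_mpa; None when the trend is flat or rising (no drying)."""
    slope, intercept = fit_trend(days, psi_mpa)
    if slope >= 0:
        return None
    crossing_day = (threshold_mpa - intercept) / slope
    return max(0.0, crossing_day - days[-1])


# Hypothetical daily midday readings (MPa) and a hypothetical trigger level.
days = [0, 1, 2, 3]
psi = [-0.5, -0.6, -0.7, -0.8]
print(days_until_irrigation(days, psi, threshold_mpa=-1.2))
```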

Performance Gains and System Improvements

The implementation of machine learning and adaptive preprocessing led to significant improvements in accuracy and reliability:

| Feature | Initial Version | Optimized Version |
|---|---|---|
| Edge Detection | Sobel/Canny | ML-Enhanced + Geometric Constraints |
| Lighting Sensitivity | High (Manual Calibration) | Adaptive Contrast Adjustment |
| Volume Detection | Manual Weighing | Automated Image-Based Calculation |
| Scheduling Data | Pressure Logs Only | Integrated with Climate & Soil Data |

The final system was more resilient to lighting variations, minimized false detections, and improved overall measurement precision.

Expanding the System for Long-Term Agricultural Insights

Beyond real-time droplet analysis, the system was expanded to integrate historical weather patterns, soil conditions, and seasonal trends to improve long-term irrigation planning. This provided predictive scheduling tailored to specific crops, ensuring that irrigation decisions were based on both real-time measurements and long-term environmental factors.

Potential future enhancements include:

- Automated model retraining to improve segmentation accuracy with more data.

- Integration with additional sensors (humidity, temperature, soil moisture) for better irrigation recommendations.

- Expansion of cloud-based analytics to enable larger-scale precision agriculture solutions.

Lessons Learned and Next Steps

This project reinforced several key lessons:

- Real-world testing is essential – Lab conditions rarely match field conditions, and iterative improvements based on real-world deployment are crucial.

- Machine learning and traditional methods can complement each other – ML helped compensate for the limitations of classical edge detection.

- Simple design changes can drastically improve accuracy – Adding a center-line constraint for droplet validation significantly reduced false positives.

Future work will focus on expanding the dataset, improving model efficiency, and integrating additional agricultural insights to refine the system further.

Conclusion

By leveraging FPGA-based image processing and machine learning, this system enables a cost-effective, automated approach to plant water potential measurement. The combination of real-time monitoring, predictive analytics, and environmental data integration provides a powerful tool for optimizing irrigation in precision agriculture.

With continued improvements, this approach has the potential to make data-driven irrigation accessible to small-scale farmers, reducing water waste and increasing crop yield in a sustainable manner.